AI-powered test generation and multi-turn conversation simulation, plus review workflows for cross-functional teams, so you catch issues before production.

Legal, PMs, and domain experts capture requirements in plain language. Rhesis turns them into realistic test scenarios and a review flow, so teams spot failures early and agree on what “good” looks like.

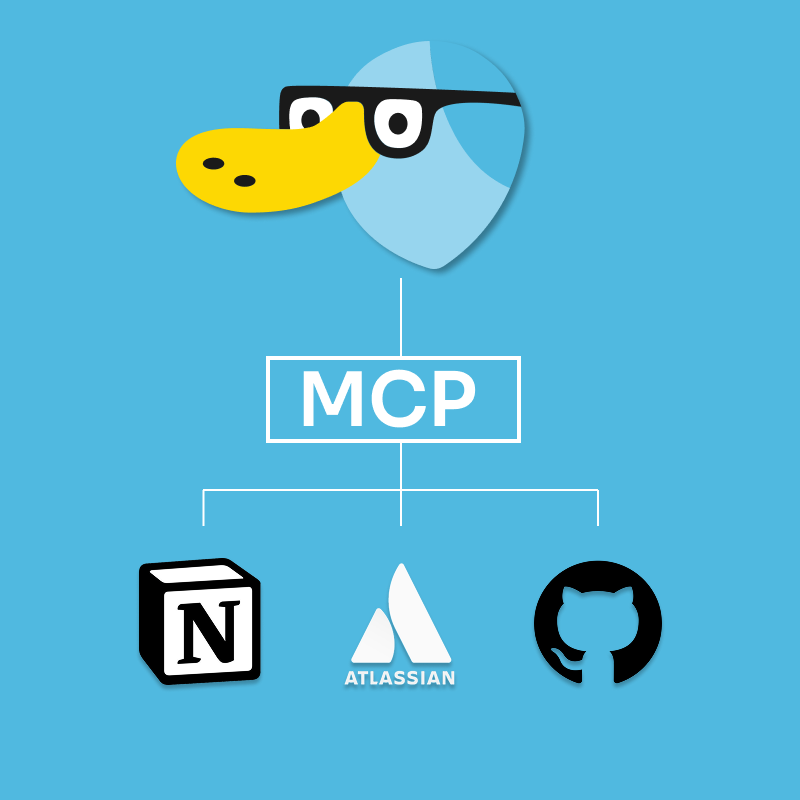

Integrate Rhesis directly into your development workflow. Generate, run, and analyze tests from code, then sync results back to the platform for review. Fewer context switches, safer releases.

From "I hope this works" to "I know this works." Everything you need to develop and ship with confidence instead of crossed fingers.

Automated scenario creation at scale

Domain-specific testing intelligence

Real-world simulation engine

Clear insights, actionable results

Works with your existing stack

You spent weeks or months building something cool. Don't let sloppy testing ruin the release. Your Gen AI deserves testing that's as thoughtful as your architecture.

Great AI teams know what they're shipping before users do. Let's turn testing from "crossing fingers" into something as sophisticated as your development process.

Our API and SDK work with any Gen AI system, from simple chatbots to complex multi-agent architectures.

Your team defines what matters: legal requirements, business rules, edge cases. We automatically generate thousands of test scenarios based on their expertise.

Set quality benchmarks that actually matter to your team. Track performance, safety, compliance, and user experience with clear analytics.

Receive detailed analysis that helps you understand exactly how your Gen AI performs before your users find out.

Everything you need to know about Rhesis AI, served with a smile.