This year, I spent a considerable amount of time attending AI events. Meetups, conferences, hackathons, you name it. At some point, the conversations started to blur together: someone would ask what I do, I'd explain what we're building at Rhesis AI, and then the same question would land, almost every single time:

"Wait, so you use AI testing AI? How do you know that AI is accurate?"

Sometimes it was curious. Sometimes, it was skeptical. Occasionally, it had the vibe of, "Gotcha."

That question stuck with me because it reveals a fundamental misunderstanding about what LLM evaluation actually looks like in practice.

Let's start with the obvious: manual testing doesn't scale. If you're building any kind of LLM application, whether it's a customer service chatbot, a content generation tool, or a complex multi-agent system, you need to test it against thousands of scenarios. Edge cases, adversarial inputs, multi-turn conversations, domain-specific queries, policy violations, safety concerns.

Writing these test cases by hand? That's weeks of work. Running them manually? That's months. And by the time you're done, your model has been updated three times, your requirements have changed, and you're back to square one.

This is exactly why we need AI testing AI. Not because it's trendy or because we want to build some recursive AI ouroboros, but because it's the only approach that scales with the complexity and pace of modern AI development.

When people ask, "How do you know the AI tester is accurate?" they're imagining some black box system where one AI blindly judges another. That's not how sophisticated LLM evaluation tools like Rhesis work.

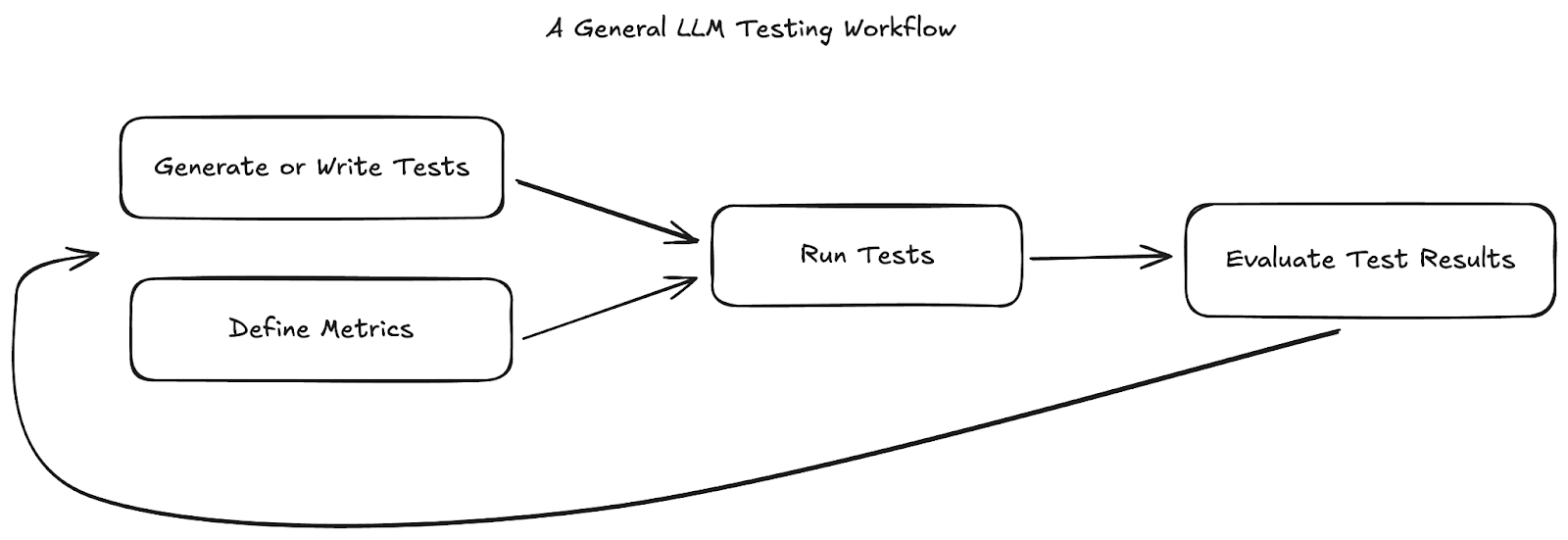

Here's what a proper AI testing methodology looks like:

The issue with generic LLM-generated test cases is that they overlook edge cases, reflect biases that leave gaps in coverage, produce redundant or overlapping scenarios, misinterpret domain-specific context, and struggle to capture rare or extreme situations. Rhesis tries to mitigate this by taking a structured approach, turning high-level requirements into comprehensive and varied test scenarios.

With Rhesis, you define:

These structured inputs feed Rhesis’s synthesizers. Adequate prompting plays a key role here: well-crafted prompts tell the synthesizer exactly what kind of scenarios, edge cases, or behaviors to produce, ensuring that generated tests are relevant, diverse, and cover realistic user interactions. You can further improve coverage by adding knowledge: uploading relevant files or integrating your knowledge systems via MCP, giving the model richer context to produce tests that truly reflect your domain and data.

But even with a more structured approach, AI-generated test cases have similar limitations as with a non-structured approach. This is why it is important to supervise and review synthetic test cases, continuously assess their coverage and relevance, and iteratively create or refine new test cases to address gaps, edge cases, or evolving requirements.

One of the biggest challenges in testing LLMs is that their safety guardrails often prevent them from generating truly malicious or edge-case inputs.

Rhesis addresses this with Polyphemus, an adversarial test generator that produces unsafe scenarios, jailbreak attempts, and policy-violating prompts that commercial LLMs normally refuse to generate. This allows you to see how your conversational AI behaves under high-risk scenarios and improve robustness before deployment.

Real users don't ask one question and stop: they engage in conversations. They ask follow-ups, reference earlier messages, and often introduce ambiguity. Advanced AI testing platforms simulate this behavior through multi-turn conversations, evaluating whether your system maintains context, responds consistently, and achieves intended goals across extended interactions.

Rhesis handles this with a specialized AI agent called Penelope. You configure Penelope with a clear goal (what success looks like), optional instructions (how to achieve it), scenario (user role or context), and any restrictions (forbidden behaviors). Penelope then autonomously drives a conversation with the system under test. In each turn she reasons about what to ask or do next (using tools if needed) to reach the goal.

Because Penelope is an agent, the test plays out like a full dialogue. Rhesis logs every exchange: Penelope’s internal reasoning, each user message and assistant response, and any tool calls. After every turn, she evaluates progress toward the goal and stops when the objective is met or a turn limit is reached. This produces an execution trace capturing the entire conversation history and outcome.

For more details on how Penelope works and how to set up multi-turn tests, see the Rhesis Penelope documentation.

This is where the "how do you know it's accurate" question gets answered. Professional AI testing doesn't rely on subjective AI judgment.

Instead of asking human reviewers to make inconsistent judgment calls, you can define metrics upfront (objective criteria like correctness, safety, or policy compliance). Rhesis, for instance, provides two types of metrics; Generic, off-the-shelf metrics (derived from frameworks like DeepEval or Ragas) assess general qualities such as fluency, coherence, and overall relevance. But you might want to define your own custom metrics, which are tailored to the specific context of an industry, company, team, or application. These metrics are implemented as LLM-as-a-judge evaluators that score every test response automatically against the same standards. This provides consistent and repeatable evaluation and drastically reduces the workload for teams. Human evaluators are not eliminated: their focus shifts on refining the metrics themselves and handling edge cases.

To explore the full range of metrics supported by Rhesis, or how to set up custom metrics, check out the documentation for the SDK or the platform.

The stakes for AI testing are getting ever higher. When your chatbot provides incorrect information, it's not just a bad user experience; it's also a potential source of legal liability, regulatory compliance issues, brand damage, and operational failure, potentially leading to financial losses.

"Test it manually and hope for the best", aka, “vibe-testing” simply doesn't cut it anymore. You need:

Humans aren't eliminated from Rhesis. They're elevated.

Instead of spending time on the tedious work of writing individual test cases and manually reviewing hundreds of responses, human experts focus on:

Rhesis handles the execution at scale, providing a platform where each test run captures the metrics, results, and reviewer comments. Humans handle intelligence and oversight.

So the next time someone asks, "How do you know the AI is testing the AI accurately?" here's the answer: A robust testing methodology doesn’t blindly rely on the AI. Instead, it incorporates human-in-the-loop evaluation: using transparent LLM-as-a-judge metrics to score test results, reviewing and interpreting the outputs, and tracking everything through audit trails. This process allows teams to iteratively refine tests, metrics, and evaluations until the results are reliable and trustworthy.

The question isn't whether to use AI to test AI; rather, it is whether to use sophisticated, purpose-built frameworks like Rhesis or continue relying on manual approaches that can’t keep pace. Many teams still rely on messy, not-versioned spreadsheets to track test cases and review results, creating confusion, inconsistent evaluations, and no clear audit trail: problems that scale poorly as your AI systems grow.

Engineers, product managers, and domain experts work together on the same platform, defining tests, reviewing results, and refining metrics. Everyone has visibility into what's being tested and why. No more testing in silos, no more knowledge trapped in one person's head.

Ready to see how this works in practice?