We had a problem with Rhesis. The platform could register REST endpoints for testing LLM applications, but the process was painful. Developers had to manually configure endpoints through our UI, mapping request parameters and response fields. Getting the mappings right was tedious and error-prone. One wrong JSONPath expression and the endpoint wouldn't work.

We needed something simpler. Developers shouldn't have to think about request mappings and response transformations. They should just point at their functions and say "test this."

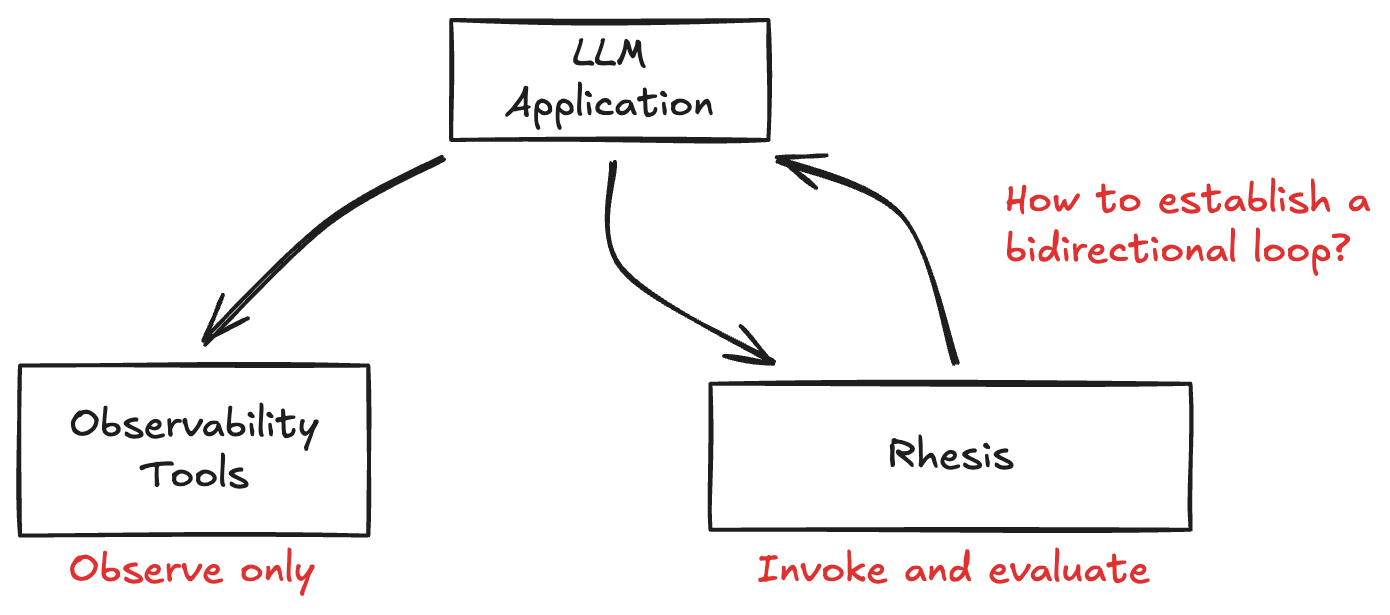

We looked at how observability tools handle this. Tools like OpenTelemetry make instrumentation easy: you decorate your functions and telemetry flows to the platform automatically. But there's a fundamental difference: observability tools work in one direction. They send trace data to an endpoint. That's fine for observability, where you're collecting data about production traffic that's already happening.

For Rhesis, one direction isn't enough. We don't just observe production traffic; we need to trigger test runs on demand. When a developer configures a test, we need to invoke their LLM function with specific inputs, collect the output, and evaluate it. We can't wait for the right production request to come in. We need bidirectional control.

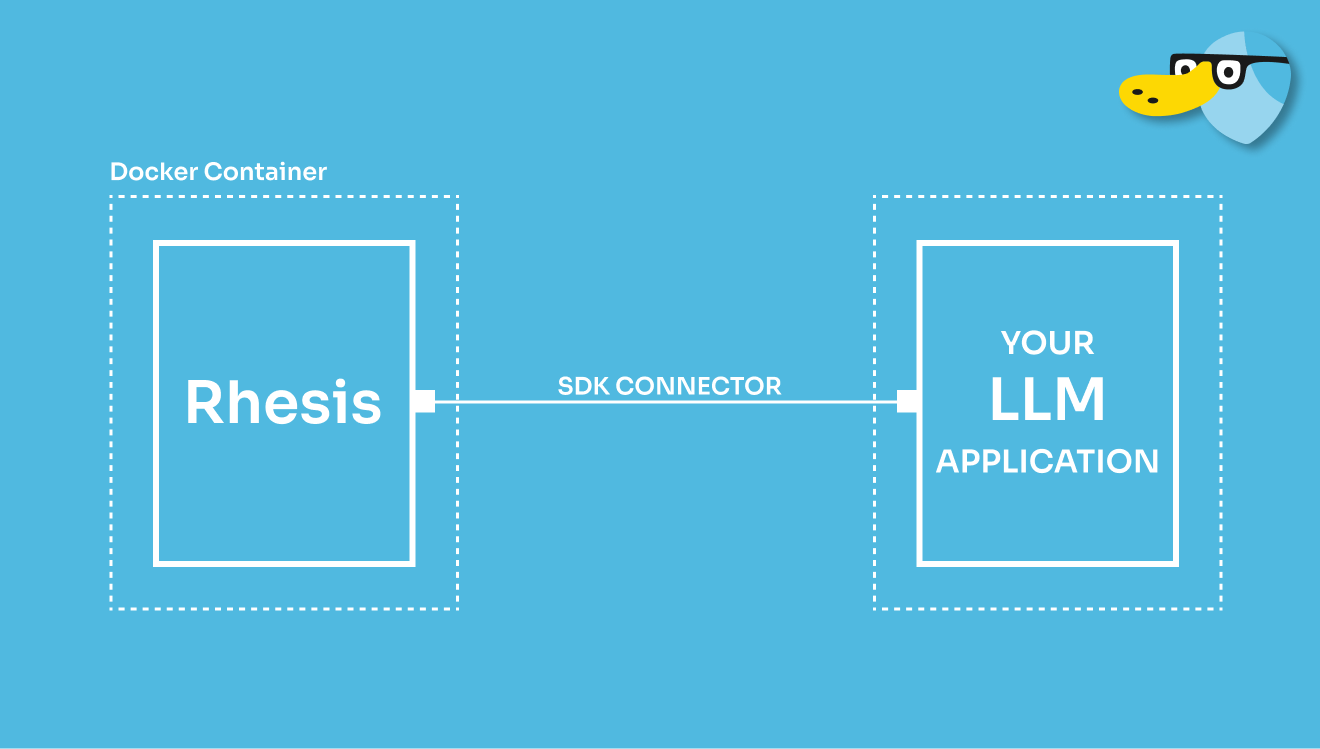

So we built the connector. A simple Python decorator that automatically registers functions with the platform and establishes a persistent WebSocket channel. Developers decorate their functions, and Rhesis can trigger them whenever a test is needed. No manual endpoint configuration. No mapping errors.

A fortunate side effect emerged: the same bidirectional channel that enables testing could also support observability scenarios. But we're getting ahead of ourselves. This is the story of how we built it.

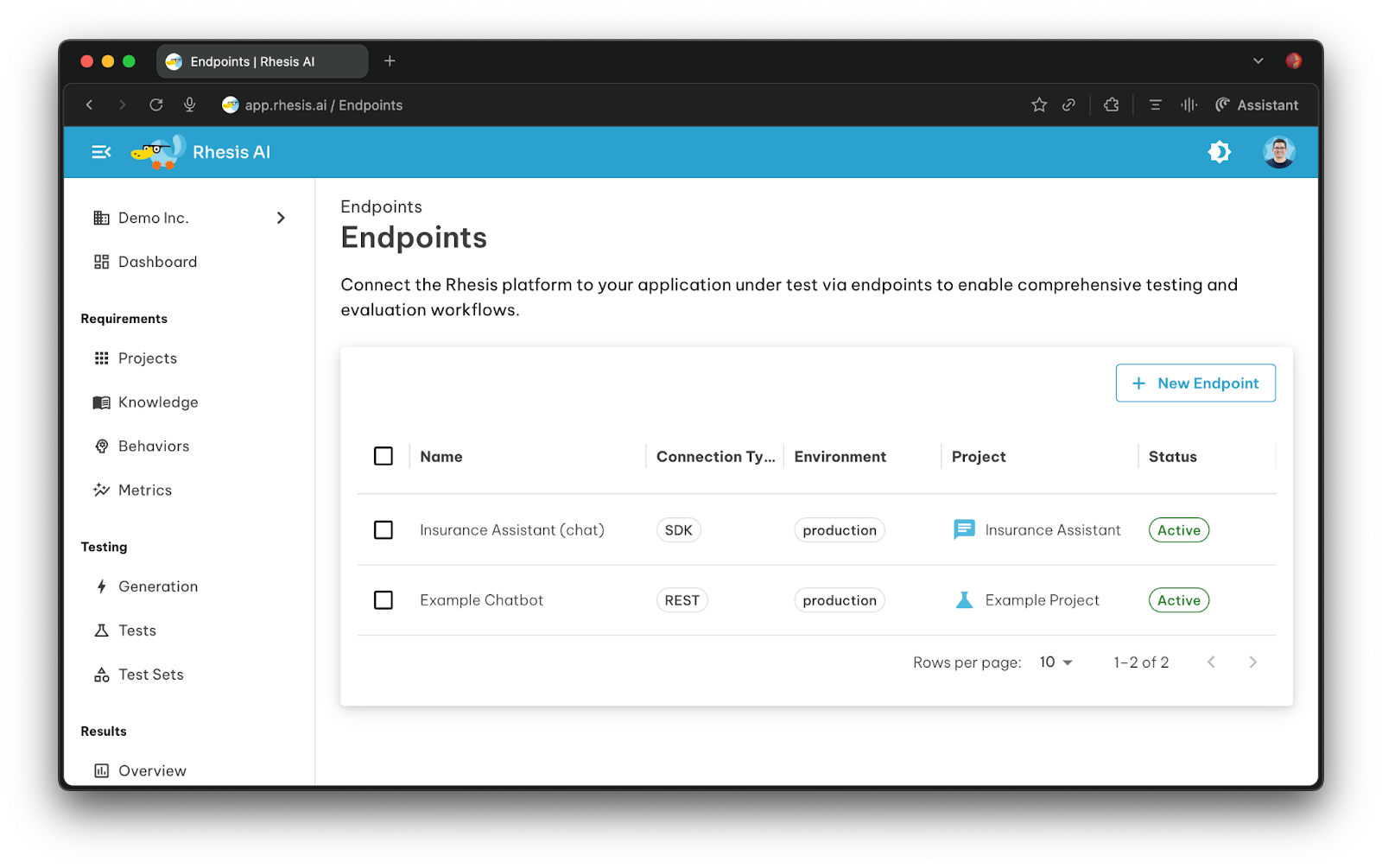

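Before the connector, registering an endpoint meant opening the UI, creating the endpoint, writing Jinja2 templates to build the request, writing JSONPath expressions to extract fields from the response, and then debugging whatever mapping errors came out.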

It was tedious. Worse, it was error-prone: one typo in a JSONPath expression and the endpoint silently fails.
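To make that failure mode concrete, here is a minimal sketch of a response mapping of the kind the UI required. It uses a tiny hand-rolled resolver for a simplified subset of JSONPath (`$.key[index].key`), not a real JSONPath library, and the response shape and field names are hypothetical. The resolver is deliberately lenient, so a typo in the path doesn't raise; it just yields `None`.

```python
import re
from typing import Any

# Hypothetical LLM API response; the field names are illustrative only.
RESPONSE = {"choices": [{"message": {"content": "Hello!"}}]}

# One path segment: a key, optionally followed by a list index like [0].
_SEGMENT = re.compile(r"^(\w+)(?:\[(\d+)\])?$")


def resolve(path: str, data: Any) -> Any:
    """Resolve a simplified JSONPath such as '$.choices[0].message.content'.

    Deliberately lenient: a missing key or index returns None instead of
    raising, which is how a typo slips through without any error.
    """
    if not path.startswith("$."):
        return None
    current = data
    for part in path[2:].split("."):
        match = _SEGMENT.match(part)
        if match is None or not isinstance(current, dict):
            return None
        key, index = match.group(1), match.group(2)
        current = current.get(key)
        if index is not None:
            if not isinstance(current, list) or int(index) >= len(current):
                return None
            current = current[int(index)]
    return current


print(resolve("$.choices[0].message.content", RESPONSE))  # prints: Hello!
print(resolve("$.choice[0].message.content", RESPONSE))   # typo, prints: None
```

Nothing in the second call signals that `choice` should have been `choices`; the mapping simply produces an empty output.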

Most importantly, it required a REST endpoint to exist in the first place. If the application didn't already expose one, the team had to build it before Rhesis could deliver any value.

We designed the connector to eliminate all of that. A persistent WebSocket link between an SDK client and the Rhesis backend with two communication patterns:

SDK → Backend: Functions register with their schemas, declaring what's available for testing.

Backend → SDK: Execution requests trigger those functions remotely, collecting results for evaluation.
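One way to picture the two directions is as two message types sharing one socket. The sketch below is an assumption for illustration only: the `type` values and field names (`register`, `execute`, `function_name`, `request_id`, `inputs`) are hypothetical and not the actual Rhesis wire protocol.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from typing import Any, Union


@dataclass
class RegisterMessage:
    """SDK → Backend: announce a function and its parameter schema."""
    function_name: str
    parameters: dict[str, str]  # parameter name → type annotation as text
    type: str = "register"


@dataclass
class ExecuteMessage:
    """Backend → SDK: ask the SDK to run a registered function."""
    request_id: str
    function_name: str
    inputs: dict[str, Any] = field(default_factory=dict)
    type: str = "execute"


Message = Union[RegisterMessage, ExecuteMessage]


def encode(message: Message) -> str:
    """Serialize a message to the JSON text frame sent over the WebSocket."""
    return json.dumps(asdict(message))


def decode(frame: str) -> Message:
    """Parse a JSON frame back into the matching message class."""
    payload = json.loads(frame)
    kind = payload.pop("type")
    if kind == "register":
        return RegisterMessage(**payload)
    if kind == "execute":
        return ExecuteMessage(**payload)
    raise ValueError(f"unknown message type: {kind!r}")
```

Carrying a `type` discriminator on every frame is what lets a single connection serve both directions: each side reads the frame and dispatches on that field.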

We invested considerable time in the interface. It had to be simple. A single decorator:

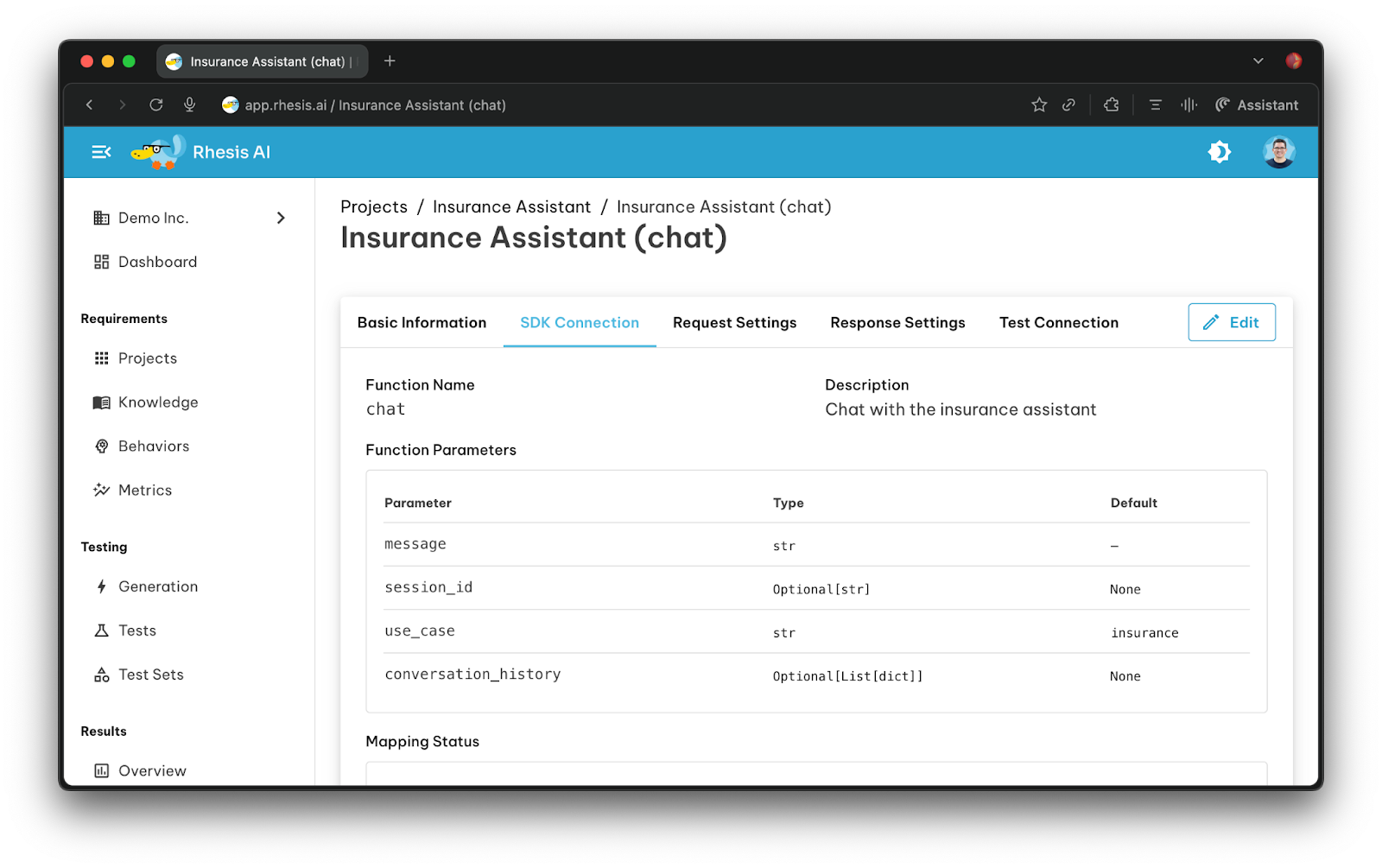

That's it. No UI configuration. No manual mappings. The decorator handles the WebSocket lifecycle, function registration, and execution coordination. Functions execute in their natural environment with real dependencies, not in isolated test harnesses. We didn't want to force developers to change how their code worked.
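Here is a minimal sketch of what such a decorator has to do at import time, assuming a module-level registry. The names `connector_endpoint`, `REGISTRY`, and `execute` are hypothetical, not the Rhesis SDK API, and the real decorator also opens the WebSocket and sends the schema to the backend, which is omitted here. The key property is that it returns the original function unchanged, so the application code keeps working exactly as before.

```python
from __future__ import annotations

import inspect
from typing import Any, Callable

# Hypothetical in-process registry: function name → (callable, schema).
REGISTRY: dict[str, tuple[Callable[..., Any], dict[str, str]]] = {}


def connector_endpoint(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record `func` and its parameter schema, then return it unchanged."""
    schema: dict[str, str] = {}
    for name, param in inspect.signature(func).parameters.items():
        annotation = param.annotation
        if annotation is inspect.Parameter.empty:
            schema[name] = "Any"
        elif isinstance(annotation, type):
            schema[name] = annotation.__name__
        else:
            # With postponed annotations this is already the source text.
            schema[name] = str(annotation)
    REGISTRY[func.__name__] = (func, schema)
    # A real connector would now send `schema` to the backend over its socket.
    return func


def execute(name: str, inputs: dict[str, Any]) -> Any:
    """Run a registered function by name, as a remote request would."""
    func, _schema = REGISTRY[name]
    return func(**inputs)


@connector_endpoint
def chat(user_message: str, conv_id: str | None = None) -> str:
    return f"echo: {user_message}"
```

Because registration happens through `inspect.signature`, the schema comes straight from the function definition; there is nothing for the developer to keep in sync by hand.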

Here's where it gets interesting. Rhesis expects a standardized format with fields such as input, session_id, and context. But developers name their parameters whatever makes sense for their application: user_message, conv_id, question, thread_id.

Previously, developers had to write these mappings by hand. Now the connector figures them out automatically using a 4-tier approach.

Pattern matching handles common naming conventions (for example, user_message → input and conv_id → session_id). Most of the time, it just works. For functions with unusual naming, an LLM figures it out. And when you need precise control, you can still provide explicit mappings.

The mappings that used to require careful manual configuration with Jinja2 templates and JSONPath expressions now happen automatically when the decorator runs. We introspect the function signature, analyze the parameter names, and generate the correct mappings on the fly.
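A rough sketch of tiered resolution, under stated assumptions: the tier order (explicit mapping, exact standard name, name pattern, then a pluggable fallback standing in for the LLM) and the regex patterns are illustrative. Pattern matching, the LLM, and explicit mappings are the tiers described above; the exact-name tier is an assumption, and `map_parameters` and `llm_fallback` are hypothetical names, with the LLM call stubbed out.

```python
from __future__ import annotations

import re
from typing import Callable, Optional

# Standard fields mentioned in the text.
STANDARD_FIELDS = ("input", "session_id", "context")

# Illustrative name patterns per standard field (assumption, not Rhesis's list).
PATTERNS = {
    "input": re.compile(r"(message|query|question|prompt)", re.I),
    "session_id": re.compile(r"(session|conv|thread)", re.I),
    "context": re.compile(r"(context|docs|document|retriev)", re.I),
}

Fallback = Callable[[str], Optional[str]]


def map_parameters(
    params: list[str],
    explicit: dict[str, str] | None = None,
    llm_fallback: Fallback | None = None,
) -> dict[str, str]:
    """Map function parameter names to standard fields, tier by tier."""
    mapping: dict[str, str] = {}
    for name in params:
        # Tier 1: explicit mappings from the developer always win.
        if explicit and name in explicit:
            mapping[name] = explicit[name]
        # Tier 2: the parameter already uses a standard name.
        elif name in STANDARD_FIELDS:
            mapping[name] = name
        else:
            # Tier 3: first field whose pattern matches the parameter name.
            target = next((f for f, p in PATTERNS.items() if p.search(name)), None)
            # Tier 4: ask the (stubbed) LLM about anything still unmapped.
            if target is None and llm_fallback is not None:
                target = llm_fallback(name)
            if target is not None:
                mapping[name] = target
    return mapping


print(map_parameters(["user_message", "conv_id"]))
# prints: {'user_message': 'input', 'conv_id': 'session_id'}
```

Ordering the tiers from cheapest and most certain to most expensive means the LLM is only consulted for names that nothing else recognizes. A fuller version would also have to resolve conflicts, such as two parameters both matching input.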

Once SDK-to-backend connectivity worked, we could open a connection and verify that an endpoint was reachable. That worked great for a single test. But we needed to run hundreds of tests at once: evaluating LLM applications means testing multiple scenarios, edge cases, and conversational flows in parallel.

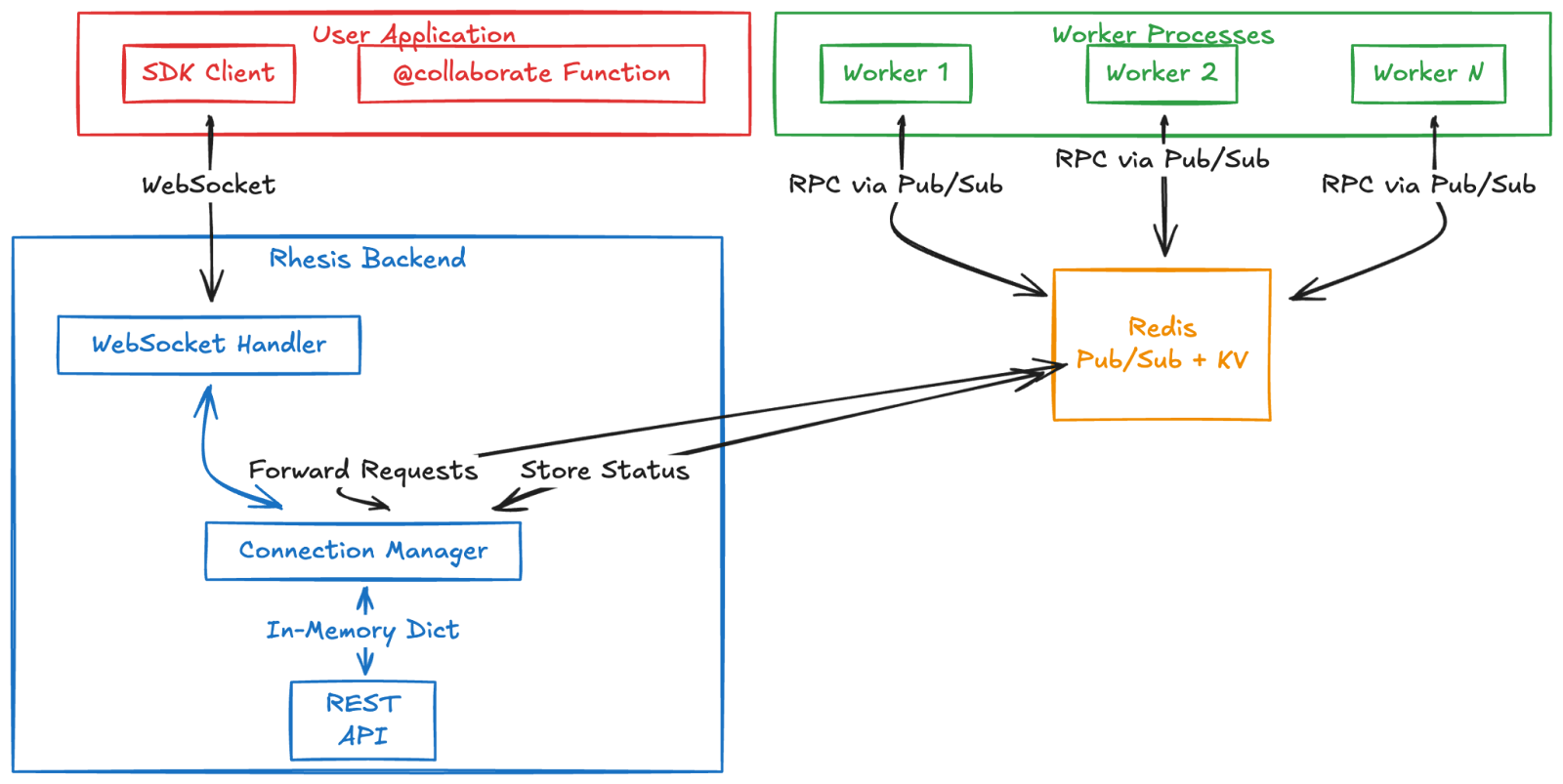

Running tests directly on the backend wasn't an option. The backend serves API requests and maintains WebSocket connections. Blocking it with test execution would kill performance.

We needed a separate component: Worker, a collection of Celery worker nodes that handle test execution asynchronously. You configure a test, the backend queues it, and workers pick it up and execute it in parallel. This architecture scales horizontally: if you need more test capacity, you just add more worker nodes.
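The queue-and-workers shape can be sketched with the standard library alone. This is a stand-in for Celery, not Celery itself: a `queue.Queue` plays the broker, threads play worker nodes, and `run_test` is a hypothetical placeholder for executing and evaluating one test case.

```python
from __future__ import annotations

import queue
import threading
from typing import Any


def run_test(case: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical placeholder for executing and evaluating one test case."""
    return {"id": case["id"], "passed": bool(case["prompt"])}


def worker(jobs: queue.Queue, results: list, lock: threading.Lock) -> None:
    """Consume jobs until a None sentinel arrives, like one worker node."""
    while True:
        case = jobs.get()
        if case is None:
            return
        outcome = run_test(case)
        with lock:
            results.append(outcome)


def run_suite(cases: list[dict[str, Any]], num_workers: int = 4) -> list[dict[str, Any]]:
    """Queue every case (the backend's role) and let the workers drain it."""
    jobs: queue.Queue = queue.Queue()
    results: list[dict[str, Any]] = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(jobs, results, lock))
        for _ in range(num_workers)
    ]
    for thread in threads:
        thread.start()
    for case in cases:
        jobs.put(case)
    for _ in threads:
        jobs.put(None)  # one sentinel per worker so each one exits
    for thread in threads:
        thread.join()
    return sorted(results, key=lambda r: r["id"])
```

The producer never needs to know how many consumers exist. Here scaling out means raising `num_workers`; with Celery it means starting more worker processes against the same broker.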

But this created a fundamental problem. Worker nodes are completely separate processes, often running on different machines. They have no notion of the WebSocket connections that exist in the backend. Only the backend itself has those connections. So we needed a way for workers to communicate with the backend, which in turn triggers functions in the target application via the SDK.

This is where things got interesting, and where Redis entered the picture.

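The system we ended up with spans four layers working across process boundaries: the SDK inside the user's application, the backend that holds the WebSocket connections, Redis for coordination, and the Worker nodes that execute tests.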

The Redis layer came later, after we discovered the hard way that workers couldn't access the backend's memory. It seems blatantly obvious in hindsight, but it only became evident well into the development cycle. The complexity was compounded by multiple backend containers in our cloud setup, each running multiple worker processes.

We debated this issue at length. The requirement was clear: trigger functions in user applications with minimal latency, potentially executing hundreds of test cases in rapid succession.

We prototyped HTTP polling first. The application asks "do you have work for me?" every 100ms. Even at that aggressive rate, we're burning 10 requests per second per connected application, and a request can still wait up to 100ms before execution even starts. It felt wasteful and slow.

WebSocket inverts the model. The connection stays open, and we push requests when needed. Latency drops to the network round-trip time, typically under 10ms. A single connection opened at startup receives all work.
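The polling cost is easy to put in numbers. With a polling interval $T = 100\,\text{ms}$, each connected application sends $1/T$ requests per second whether or not there is work. Assuming a request arrives at a uniformly random moment within an interval, it waits $T/2$ on average and up to $T$ before the next poll picks it up:

$$
r = \frac{1}{T} = \frac{1}{0.1\,\text{s}} = 10\ \text{requests/s}, \qquad 10 \times 86\,400\ \text{s/day} = 864\,000\ \text{requests/day per application}
$$

$$
\mathbb{E}[\text{wait}] = \frac{T}{2} = 50\,\text{ms}, \qquad \max(\text{wait}) = T = 100\,\text{ms}
$$

A persistent WebSocket removes both costs: an idle connection sends no requests, and the wait is bounded by the network round trip instead of the polling interval.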

Trade-offs we accepted:

Benefits we gained:

For a testing platform, precise control over execution timing outweighed the operational burden. We committed to WebSocket.

With Worker handling test execution separately from the backend, we hit the core distributed systems problem. The first implementation seemed straightforward: the backend stores connections in an in-memory dictionary, and workers check that dictionary.

We deployed it, connected an SDK, triggered a test from a worker. Error: "SDK client is not currently connected."

Wait, what? The SDK was clearly connected. Direct API calls worked perfectly. What was going on?

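We spent quite some time debugging before it hit us, in a textbook 'duh' moment. The problem is fundamental to how operating systems work: every process has its own isolated address space, and the connection dictionary lived in the memory of the backend process.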

Worker nodes couldn't access that memory. The dictionary existed in a completely separate process's address space. Of course they couldn't see it.

Okay, Redis to the rescue. We added the connection state to Redis, deployed, tested again. Sometimes it worked. Sometimes it failed with the same error. Intermittent failures, a developer’s favorite kind of bug.

More debugging. We discovered another layer of complexity: the backend itself runs with multiple Gunicorn worker processes (typically 4+) for handling API load. These are separate from the Celery Worker nodes. They're the backend's own processes for serving HTTP requests and maintaining WebSocket connections. Each backend worker process maintains its own _connections dictionary.

In our cloud setup, we also run multiple backend containers for redundancy. So we have multiple containers, each with multiple worker processes, each with isolated memory.

The race condition was brutal:

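What was happening:

1. A worker published a test request, and every backend worker process listening for requests received it.
2. The processes that didn't hold the WebSocket in their local dictionary concluded that the SDK was disconnected and immediately published an error.
3. The one process that did hold the connection forwarded the request, the SDK executed the function, and that process published the real result.
4. The worker accepted whichever message arrived first, which was usually the error.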

The test was succeeding, but we were reporting failure. The logs showed both the error and the successful result, milliseconds apart.

Now we had a choice to make: where should we store the connection state? Multiple backend worker processes and multiple containers compounded the memory isolation problem, so we needed a shared store such as Redis for cross-process visibility. But Redis adds latency to every lookup.

We ended up with a hybrid approach:

Each backend worker process maintains its own dictionary for fast lookups. When handling WebSocket connections or direct API calls, it checks its local dictionary first. Worker nodes, which check connection status and coordinate RPC, query Redis instead.
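A minimal sketch of the hybrid store, assuming a Redis-like client with `set(key, value, ex=...)`, `exists`, and `delete`. To keep it runnable without a server, `FakeRedis` is an in-memory stand-in; with redis-py you would pass a `redis.Redis` instance, whose `set`, `exists`, and `delete` accept these arguments. The `ws:connection:{project_id}:{environment}` key and one-hour TTL follow the RPC key scheme; `ConnectionStore` and its method names are hypothetical.

```python
from __future__ import annotations

import time
from typing import Any

CONNECTION_TTL_SECONDS = 3600  # one hour, as in the key scheme


class FakeRedis:
    """In-memory stand-in for the few redis-py calls used here."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[str, float]] = {}

    def set(self, key: str, value: str, ex: int | None = None) -> bool:
        expires = time.monotonic() + ex if ex else float("inf")
        self._data[key] = (value, expires)
        return True

    def exists(self, key: str) -> int:
        entry = self._data.get(key)
        if entry is None or entry[1] < time.monotonic():
            self._data.pop(key, None)  # drop expired keys lazily
            return 0
        return 1

    def delete(self, key: str) -> int:
        return 1 if self._data.pop(key, None) is not None else 0


def connection_key(project_id: str, environment: str) -> str:
    return f"ws:connection:{project_id}:{environment}"


class ConnectionStore:
    """Per-process dict for fast lookups, mirrored to Redis for other processes."""

    def __init__(self, redis_client: Any) -> None:
        self._local: dict[str, Any] = {}  # key → WebSocket owned by this process
        self._redis = redis_client

    def connect(self, project_id: str, environment: str, websocket: Any) -> None:
        key = connection_key(project_id, environment)
        self._local[key] = websocket
        self._redis.set(key, "active", ex=CONNECTION_TTL_SECONDS)

    def disconnect(self, project_id: str, environment: str) -> None:
        key = connection_key(project_id, environment)
        self._local.pop(key, None)
        self._redis.delete(key)

    def local_socket(self, project_id: str, environment: str) -> Any | None:
        """Fast path: only sockets owned by this process, no network hop."""
        return self._local.get(connection_key(project_id, environment))

    def is_connected_anywhere(self, project_id: str, environment: str) -> bool:
        """Cross-process path used by workers: does any process own it?"""
        return bool(self._redis.exists(connection_key(project_id, environment)))
```

Both writes happen in `connect` and `disconnect`, which is exactly where the synchronization complexity lives. The TTL acts as a safety net if a process dies without cleaning up, so a connection that stays open longer than an hour would need its key refreshed periodically.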

Backend worker processes now check Redis before publishing an error.

This simple check fixed the race condition. If Redis says a connection exists, trust it and let the process that owns it handle the request. Only publish an error if Redis confirms the connection truly doesn't exist.
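The fix boils down to one extra branch in the handler that every backend process runs when a request arrives. A sketch, assuming a local dictionary of sockets and a Redis-like `exists` call; `handle_rpc_request`, `publish_error`, and the request field names are hypothetical, and `FakeRedis` only stands in for a real client.

```python
from __future__ import annotations

from typing import Any, Callable


class FakeRedis:
    """Minimal stand-in with redis-py's `exists` semantics (count of keys)."""

    def __init__(self, keys: set[str]) -> None:
        self.keys = keys

    def exists(self, key: str) -> int:
        return int(key in self.keys)


def handle_rpc_request(
    request: dict[str, Any],
    local_connections: dict[str, Callable[[dict[str, Any]], None]],
    redis_client: Any,
    publish_error: Callable[[str, str], None],
) -> str:
    """Decide what this backend process does with a broadcast RPC request."""
    key = request["connection_key"]
    send = local_connections.get(key)
    if send is not None:
        send(request)  # this process owns the WebSocket: forward the request
        return "forwarded"
    if redis_client.exists(key):
        # Another process owns the connection; stay silent and let it answer.
        return "ignored"
    # No process owns it, so "not connected" is finally the truth.
    publish_error(request["response_channel"], "SDK client is not currently connected")
    return "error"
```

The important case is the middle one: before the fix, a process without the socket went straight to the error branch and raced the owner's real result.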

We also considered moving everything to Redis and making it the single source of truth. But then every connection lookup would hit Redis, adding 1-2ms of latency plus serialization overhead. For the high-frequency calls serving the REST API, that was unacceptable.

The hybrid approach has its own cost: synchronizing both stores during connect and disconnect adds complexity. In exchange, we optimize locally where possible (in-memory for same-process operations) and coordinate across processes only where necessary (Redis for cross-process communication). The hybrid approach balanced performance with distributed coordination across multiple containers and worker processes.

Once we solved the multi-process visibility problem, we had the infrastructure for workers to invoke SDK functions through the backend. Redis became our coordination layer, bridging the workers and the backend's WebSocket connections. We built a Remote Procedure Call (RPC) pattern on three data structures:

- ws:connection:{project_id}:{environment} → "active" (1-hour TTL)
- ws:rpc:requests (shared pub/sub channel)
- ws:rpc:response:{test_run_id} (per-request pub/sub channel)

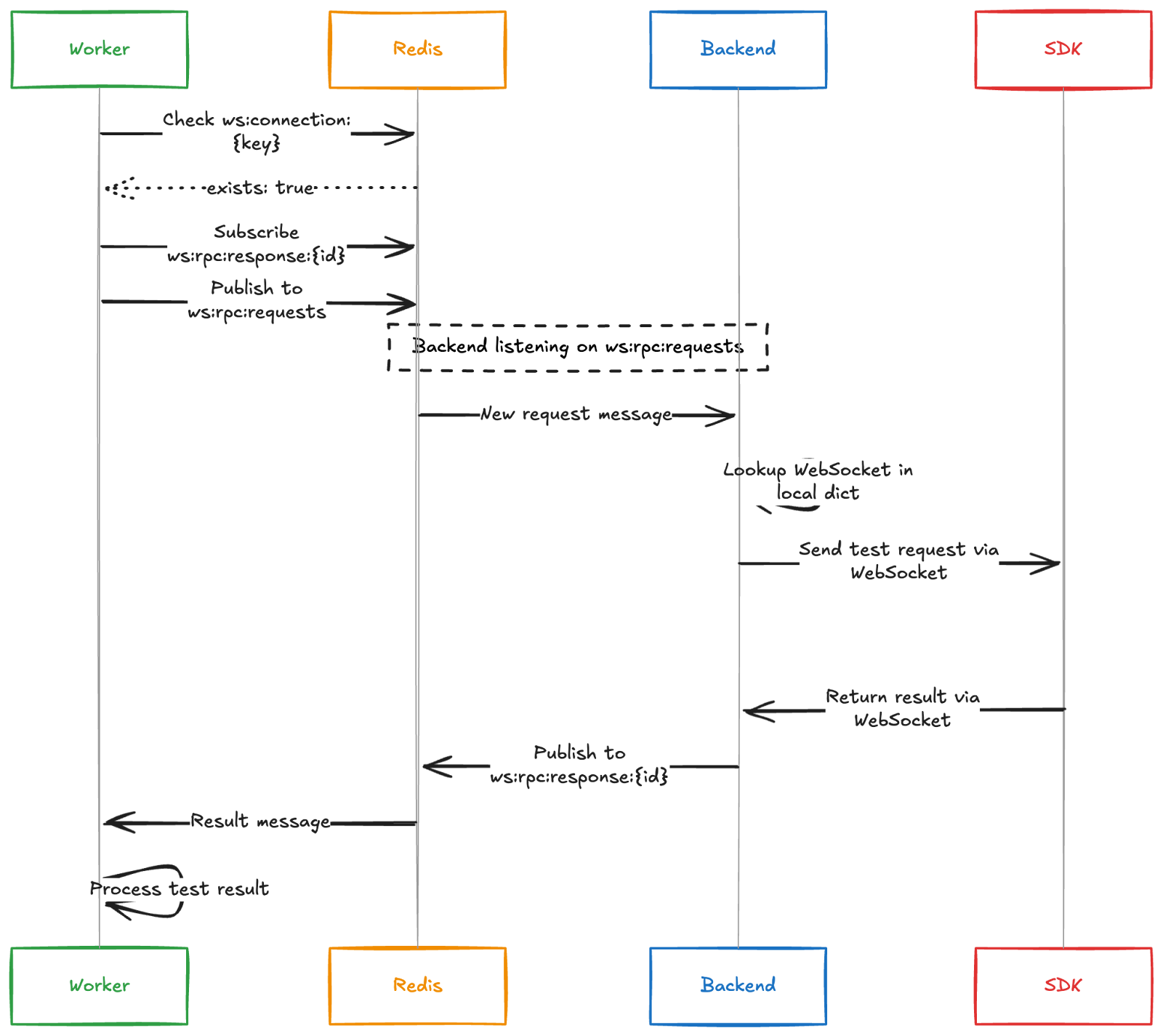

A worker verifies the connection exists in Redis, subscribes to a response channel unique to this request, then publishes the request to the shared channel. The backend runs a background task listening on ws:rpc:requests. When a message arrives, it looks up the WebSocket in its local dictionary and forwards the request through it.

The SDK executes the function and returns results through the WebSocket. The backend publishes to the response channel. The worker, still listening, receives the result and continues.
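The round trip can be sketched end to end in a single process. Everything Redis does here is played by `FakePubSub`, a hypothetical in-memory broker that gives each subscriber its own `queue.Queue`; with redis-py the same steps map onto `publish` and `pubsub().subscribe`. The channel names follow the scheme above, the SDK is simulated by a plain function, the 30-second timeout comes from the text, and the worker's initial existence check is left out for brevity.

```python
from __future__ import annotations

import json
import queue
import threading
from collections import defaultdict
from typing import Any, Callable

REQUESTS_CHANNEL = "ws:rpc:requests"
WORKER_TIMEOUT_SECONDS = 30.0
STOP = "__stop__"  # hypothetical shutdown message for the sketch


class FakePubSub:
    """In-memory stand-in for Redis pub/sub: each subscriber gets every message."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[queue.Queue]] = defaultdict(list)
        self._lock = threading.Lock()

    def subscribe(self, channel: str) -> queue.Queue:
        inbox: queue.Queue = queue.Queue()
        with self._lock:
            self._subscribers[channel].append(inbox)
        return inbox

    def publish(self, channel: str, message: str) -> int:
        with self._lock:
            inboxes = list(self._subscribers[channel])
        for inbox in inboxes:
            inbox.put(message)
        return len(inboxes)  # like Redis PUBLISH: number of receivers


def backend_listener(
    broker: FakePubSub,
    inbox: queue.Queue,
    sdk_sockets: dict[str, Callable[[dict[str, Any]], Any]],
) -> None:
    """Backend background task: forward requests for connections it owns."""
    while True:
        raw = inbox.get()
        if raw == STOP:
            return
        request = json.loads(raw)
        sdk = sdk_sockets.get(request["connection_key"])
        if sdk is None:
            continue  # another process owns this connection and will answer
        result = sdk(request["inputs"])  # stands in for the WebSocket round trip
        broker.publish(request["response_channel"], json.dumps({"result": result}))


def worker_call(
    broker: FakePubSub,
    connection_key: str,
    test_run_id: str,
    inputs: dict[str, Any],
    timeout: float = WORKER_TIMEOUT_SECONDS,
) -> Any:
    """Worker side: subscribe, publish, then block until a reply or timeout."""
    response_channel = f"ws:rpc:response:{test_run_id}"
    # Subscribe before publishing so a fast reply cannot be missed.
    replies = broker.subscribe(response_channel)
    broker.publish(REQUESTS_CHANNEL, json.dumps({
        "connection_key": connection_key,
        "response_channel": response_channel,
        "inputs": inputs,
    }))
    try:
        return json.loads(replies.get(timeout=timeout))["result"]
    except queue.Empty:
        raise TimeoutError(f"no reply on {response_channel} after {timeout}s") from None
```

Subscribing to the response channel before publishing the request matters: Redis pub/sub does not store messages, so a reply published before anyone is listening is simply lost. A production worker would also unsubscribe from the response channel once the reply arrives.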

Performance: the entire round trip takes 50-200ms. Redis adds only 2-5ms of overhead; the rest is function execution time. Worker nodes time out after 30 seconds to handle slow functions or disconnected SDKs.

The beauty of the pub/sub model: workers don't need to know which backend process owns a connection. They just publish to Redis and trust that the right process will pick it up.

Here's what connecting an application looks like now:

In this example, chat is your application’s entry point for incoming user requests, i.e., how your application actually talks to the world.

Three steps. No UI configuration. No manual mappings. Compare this to the old process: open UI, create endpoint, write Jinja2 templates, write JSONPath expressions, debug mapping errors. We went from a multi-step error-prone process to three lines of code.

In the screenshot below, you can see the new chat endpoint registered with the connection type SDK, living side-by-side with REST-type endpoints. In the details page, you can see that the function parameters have been mapped automatically.

Building the connector for testing opened an unexpected door.

We realized the WebSocket channel we built could be used in more than one way. The same persistent connection that carries test requests could transport traces, metrics, and logs from production traffic. We're already serializing execution data, already maintaining the connection, already handling authentication. The infrastructure is sitting there.

The idea is to adopt OpenTelemetry semantic conventions directly. When a decorated function executes, whether triggered remotely by a test or called locally in production, it generates a span. That span flows through the WebSocket with execution metrics: time, token usage, and cost. The backend correlates test results with production traces, showing how the same function behaves under testing versus real traffic.
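One way this could look, sketched under assumptions: a decorator times each call and pushes a span-like dict onto an outbound queue that the connector would drain over its WebSocket. The token attributes use names from the OpenTelemetry GenAI semantic conventions (`gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`), but this does not use the OpenTelemetry SDK, and the span layout, the `traced` decorator, the `outbound` queue, the `app.trigger` attribute, and `run_as_test` are all hypothetical. Cost is left out.

```python
from __future__ import annotations

import contextvars
import functools
import queue
import time
import uuid
from typing import Any, Callable

# Outbound buffer the connector would drain over its WebSocket (hypothetical).
outbound: queue.Queue = queue.Queue()

# "test" when the backend triggered the call remotely, else "production".
trigger: contextvars.ContextVar[str] = contextvars.ContextVar("trigger", default="production")


def traced(func: Callable[..., Any]) -> Callable[..., Any]:
    """Emit one span-like record per call, whoever triggered it."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        span: dict[str, Any] = {
            "trace_id": uuid.uuid4().hex,
            "name": func.__name__,
            "start_time_unix_nano": time.time_ns(),
            "attributes": {"app.trigger": trigger.get()},
        }
        try:
            result = func(*args, **kwargs)
            span["status"] = "OK"
            # Assumes the function reports token usage in a "usage" dict.
            usage = result.get("usage", {}) if isinstance(result, dict) else {}
            for key in ("input_tokens", "output_tokens"):
                if key in usage:
                    span["attributes"][f"gen_ai.usage.{key}"] = usage[key]
            return result
        except Exception:
            span["status"] = "ERROR"
            raise
        finally:
            span["end_time_unix_nano"] = time.time_ns()
            outbound.put(span)

    return wrapper


def run_as_test(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """What a remote executor might do: mark the call as test-triggered."""
    token = trigger.set("test")
    try:
        return func(*args, **kwargs)
    finally:
        trigger.reset(token)
```

Because the trigger travels in a context variable rather than a function argument, the same decorated function produces comparable spans in both settings, which is what makes correlating test runs with production traffic possible.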

We built this to solve endpoint registration and testing. But we accidentally created infrastructure for comprehensive instrumentation. The connector that started as a convenience for developers could become their observability pipeline too. Same channel, dual purpose. It feels elegant.

We set out to eliminate tedious endpoint configuration. What we built was a bidirectional connector that automatically maps function signatures, coordinates across distributed processes, and scales test execution.

The automatic mapping generation turned minutes of manual Jinja2 and JSONPath configuration into milliseconds of pattern matching with LLM fallback. The distributed coordination challenge (enabling Worker nodes to trigger functions through WebSocket connections in separate backend processes) forced us into Redis pub/sub RPC with hybrid storage. We optimize locally where possible, coordinate across processes only where necessary.

The patterns we implemented (lazy initialization, hybrid storage, and pub/sub RPC) apply broadly to distributed systems where components must coordinate without shared memory.

What started as easier endpoint registration became infrastructure for bidirectional control. And it opened an unexpected door: the same channel can handle observability data, turning a testing connector into the foundation for a comprehensive instrumentation platform.