Artificial Intelligence (AI) is transforming numerous sectors, profoundly changing how tasks are performed and how decisions are made. However, as AI's prevalence increases, so does the need for trustworthiness, i.e., ensuring that AI applications operate as intended and meet required quality standards. Trustworthiness is therefore key not only for user confidence but also for ethical, legal, and operational reasons. Quality Assurance (QA) is an integral part of achieving trustworthy AI. This blog post explores why QA matters in ensuring trustworthy AI applications and agents.

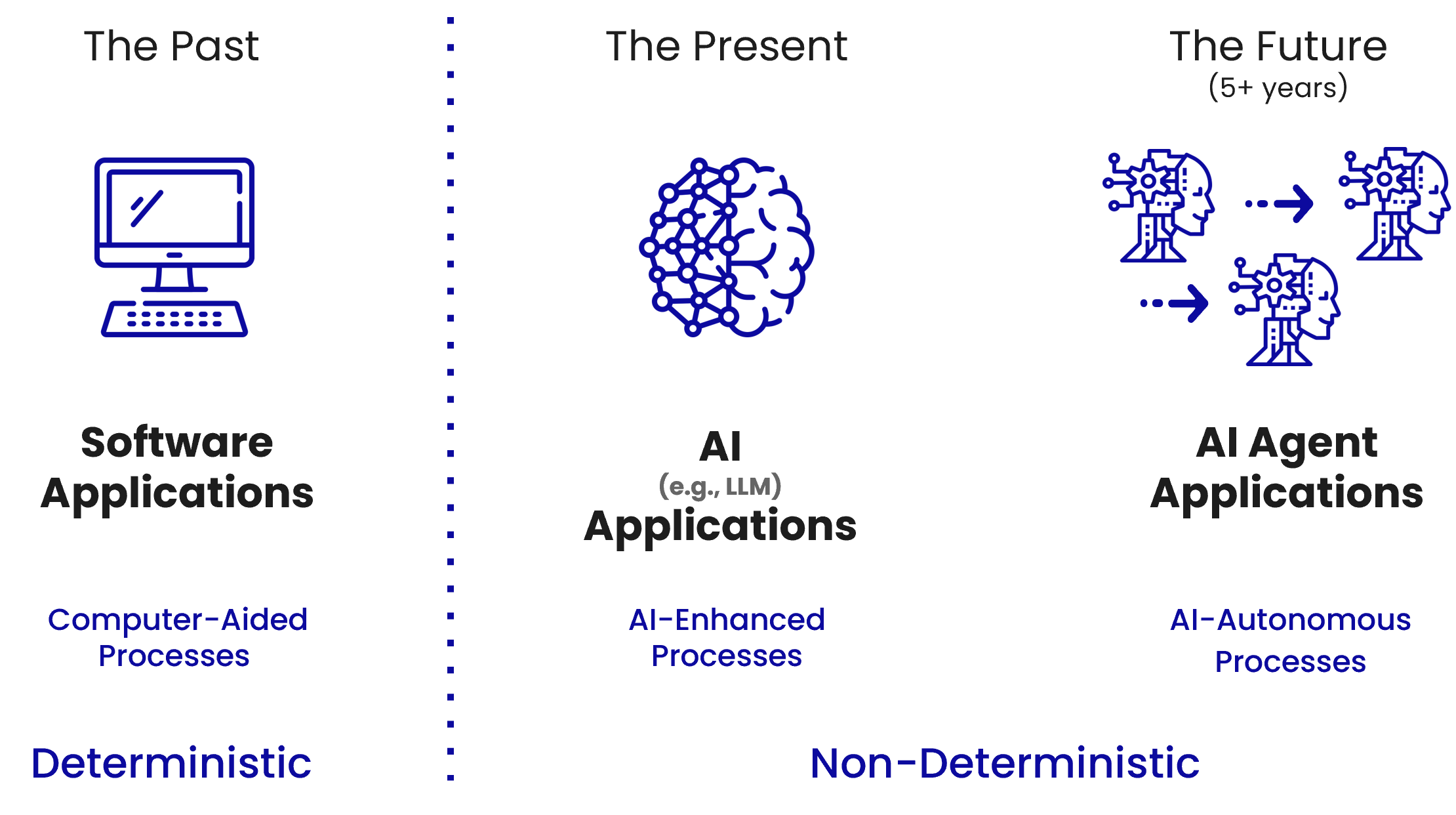

QA is a systematic process designed to determine whether a product or service meets specified requirements. In traditional software, QA relies on techniques such as bug detection, continuous integration/continuous deployment (CI/CD) tooling, and various testing methodologies (e.g., regression testing, unit testing). However, AI applications, such as those based on large language models (LLMs), present unique challenges that traditional QA methods cannot fully address. AI applications often behave non-deterministically, meaning the same input can produce different outputs. While there are ways to make outputs more repeatable (for example, lowering the sampling temperature), some degree of non-determinism is unavoidable, introducing uncertainty and potential biases that are difficult to detect.
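A common QA tactic for non-deterministic systems is to send the same prompt several times and measure how often the outputs agree. The sketch below assumes a hypothetical `fake_llm` function standing in for a real model call: it samples from a fixed set of made-up answers and reshapes their probabilities with a temperature parameter in the same way LLM sampling does.

```python
import random
from collections import Counter


def fake_llm(prompt: str, temperature: float, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call (not a real API).

    Temperature 0 always returns the most likely answer; higher temperatures
    flatten the distribution so less likely answers appear more often.
    """
    candidates = ["Paris", "Paris.", "The capital is Paris.", "Lyon"]
    base_probs = [0.7, 0.15, 0.1, 0.05]  # illustrative values only
    if temperature == 0:
        return candidates[0]
    # Raising probabilities to 1/T is equivalent to dividing the logits by T.
    weights = [p ** (1 / temperature) for p in base_probs]
    return rng.choices(candidates, weights=weights, k=1)[0]


def consistency_rate(prompt: str, temperature: float, runs: int = 50, seed: int = 0) -> float:
    """Fraction of runs that return the single most common output."""
    rng = random.Random(seed)  # seeded so the test itself is reproducible
    outputs = [fake_llm(prompt, temperature, rng) for _ in range(runs)]
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / runs


for t in (0.0, 0.7, 1.5):
    print(t, consistency_rate("What is the capital of France?", t))
```

Note that `"Paris"` and `"Paris."` count as different outputs here; in practice a check like this usually normalizes answers, or compares them semantically, before counting agreement.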

Furthermore, given all the different parameters that can be tuned to produce a given output, developing AI applications is a complex, inherently iterative process. These parameters range from basic model parameters such as temperature, frequency and presence penalties, and top-p, all the way to system prompt design and document retrieval architectures, each with its own design choices. This complexity demands robust QA processes tailored to AI's unique characteristics. As AI applications are increasingly integrated into core business processes as autonomous agents, the behavior of the overall system (AI interacting with non-AI components) must be evaluated, because, unlike traditional software, AI applications can interpret inputs in different ways and produce unforeseen outputs. Ensuring quality in such dynamic environments requires ongoing QA processes. Moreover, AI applications can significantly impact individuals and society, necessitating QA practices that address ethical considerations such as fairness, transparency, and accountability.
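The size of this design space is easy to underestimate. The sketch below enumerates a small, hypothetical grid of settings and counts how many model calls a full evaluation sweep would need. The parameter names mirror common LLM API options; `retriever_top_k`, the prompt variant IDs, and all values are placeholders chosen for illustration.

```python
from itertools import product
from math import prod

# Hypothetical search space: names mirror common LLM settings; values are placeholders.
search_space = {
    "temperature": [0.0, 0.7],
    "top_p": [0.9, 1.0],
    "frequency_penalty": [0.0, 0.5],
    "presence_penalty": [0.0, 0.5],
    "system_prompt": ["concise_v1", "detailed_v2"],  # assumed prompt variant IDs
    "retriever_top_k": [3, 8],  # assumed number of retrieved documents
}


def configurations(space: dict) -> list:
    """Expand the grid into one dict per parameter combination."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


def model_calls_needed(space: dict, n_test_cases: int, repeats: int) -> int:
    """Calls for a full sweep: every config x every test case x repeated samples."""
    return prod(len(values) for values in space.values()) * n_test_cases * repeats


configs = configurations(search_space)
print(len(configs))  # 64
print(model_calls_needed(search_space, n_test_cases=200, repeats=5))  # 64000
```

Only two values per setting already yield 64 configurations, and repeating each of 200 test cases five times (to account for non-determinism) requires 64,000 model calls. This is one reason to vary a few parameters at a time and re-test after each change rather than sweeping everything at once.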

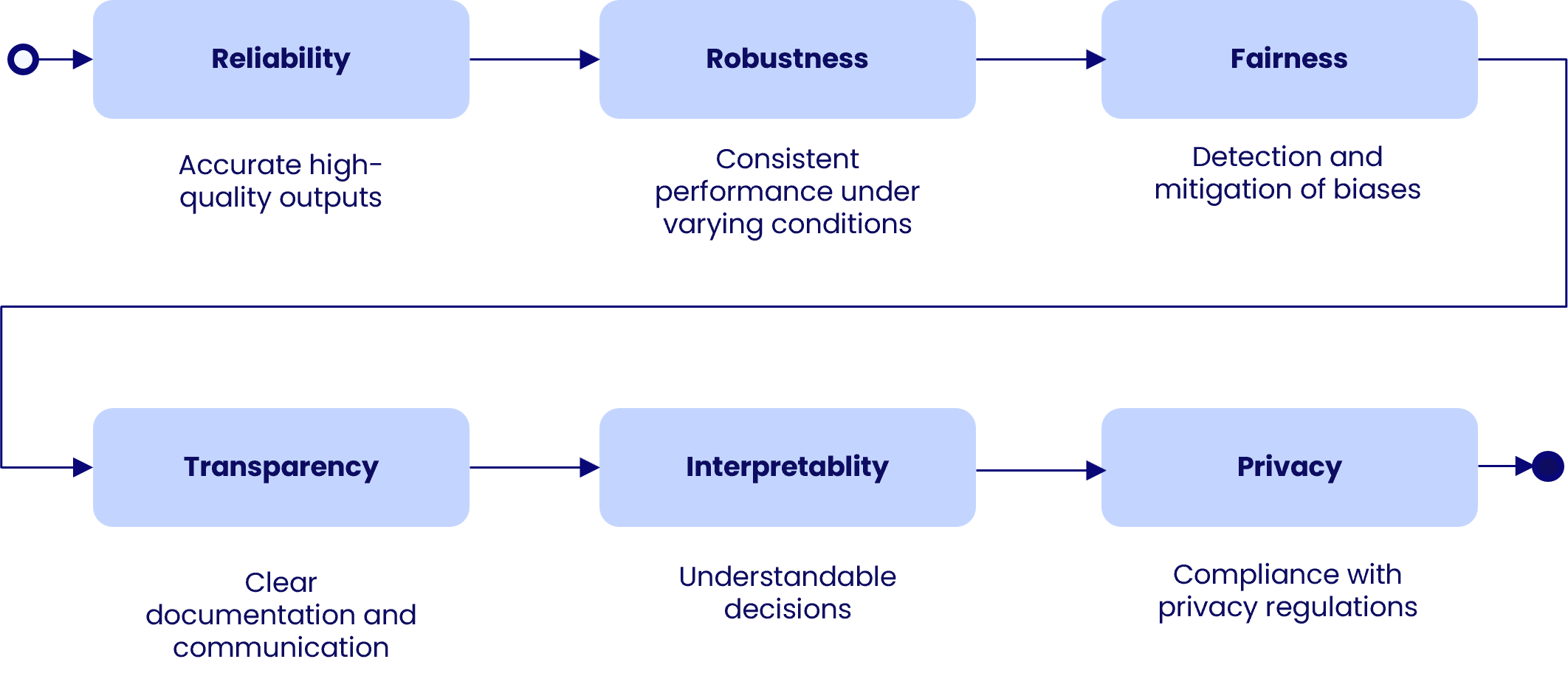

Achieving trustworthy AI involves focusing on several key aspects. Reliability ensures that AI applications produce accurate, high-quality outputs across diverse scenarios. Robustness refers to the AI system's ability to maintain performance under varying conditions, including adversarial inputs and unforeseen data patterns. Together, these two properties ensure that the AI system can handle real-world variability and other challenges. Fairness is critical for preventing discriminatory outcomes; therefore, QA processes must include methods to detect and mitigate biases in AI predictions. Regular audits and bias assessments help maintain fairness throughout the AI lifecycle, supporting equitable treatment of all users. Furthermore, transparency involves clear documentation and communication about how AI applications make decisions, which fosters user trust and supports regulatory compliance. Similarly, interpretability ensures that AI decisions can be understood and explained, which is important for debugging, regulatory purposes, and building user trust. Finally, privacy must be carefully considered, especially when AI applications handle sensitive personal data. QA processes must therefore verify the AI application’s compliance with privacy regulations such as the GDPR and protect user data from misuse.
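Robustness can be probed by checking whether small, meaning-preserving changes to an input change the output. The sketch below applies a few simple perturbations (case changes, extra whitespace, one swapped character) and reports the fraction of perturbed inputs whose output matches the original. `toy_classifier` is a hypothetical keyword-based model used only to make the example runnable; a real test would call the application under test and typically use a much richer set of perturbations.

```python
import random


def add_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def perturbations(text: str, rng: random.Random) -> list:
    """Meaning-preserving variants of an input (a deliberately small set)."""
    return [text.upper(), text.lower(), "  " + text + "  ", add_typo(text, rng)]


def toy_classifier(text: str) -> str:
    """Hypothetical model under test: routes messages that mention a refund."""
    return "refund" if "refund" in text.lower() else "other"


def robustness_score(model, inputs, seed: int = 0) -> float:
    """Fraction of perturbed inputs whose output matches the unperturbed output."""
    rng = random.Random(seed)
    stable = total = 0
    for text in inputs:
        expected = model(text)
        for variant in perturbations(text, rng):
            total += 1
            stable += model(variant) == expected
    return stable / total


score = robustness_score(toy_classifier, ["I want a refund for my order", "Where is my package?"])
print(f"robustness: {score:.2f}")
```

A keyword matcher like this breaks whenever the typo lands inside "refund", which is exactly the kind of brittleness such a test is meant to surface.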

The QA process for AI applications involves several steps to ensure that the application not only meets its defined requirements but also performs effectively and fairly in real-world scenarios. This process covers everything from defining clear requirements and validating model suitability, to rigorous testing, ongoing maintenance, and ensuring user satisfaction. Each step is designed to address specific aspects of the application’s development and deployment, ensuring that it functions reliably, securely, and equitably.

The first step is to specify clear, measurable requirements for the AI application. This includes defining acceptable performance levels, fairness criteria, and security measures. Stakeholders from various domains, such as technical, legal, and ethical fields, should be involved to ensure comprehensive requirement gathering. Additionally, it is important to validate the suitability of any pre-trained models being used, ensuring they are appropriate for the specific use case. While the GPT models behind ChatGPT are often the default choice, other options, such as Small Language Models (SLMs), should also be considered.
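Measurable requirements are easiest to enforce when they are written down as explicit thresholds that automated tests can check. The sketch below encodes a few requirements as data; the metric names and threshold values are assumptions for illustration, not recommended targets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """A single measurable requirement expressed as a metric threshold."""
    name: str
    threshold: float
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


# Illustrative requirements; metric names and thresholds are assumptions.
REQUIREMENTS = [
    Requirement("answer_accuracy", 0.90),
    Requirement("max_group_accuracy_gap", 0.05, higher_is_better=False),
    Requirement("p95_latency_seconds", 2.0, higher_is_better=False),
    Requirement("pii_leak_rate", 0.0, higher_is_better=False),
]


def check_requirements(measured: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail per requirement; a metric that was not measured counts as a failure."""
    return {
        r.name: r.name in measured and r.is_met(measured[r.name])
        for r in REQUIREMENTS
    }


results = check_requirements({
    "answer_accuracy": 0.93,
    "max_group_accuracy_gap": 0.08,
    "p95_latency_seconds": 1.4,
})
print(results)
```

Treating an unmeasured metric as a failure (here, `pii_leak_rate`) keeps a requirement from silently passing just because nobody tested it.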

Once the requirements are defined, the next step is to ensure that the AI system integrates seamlessly with existing software and workflows. This involves testing the APIs, the data ingestion processes, and how downstream systems consume the AI's outputs. Performance testing is also conducted at this stage to assess how the AI application performs under various conditions, including different load levels and stress scenarios. This helps identify scalability issues and performance bottlenecks.
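A basic load test sends many requests concurrently and summarizes the latency distribution. The sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor` and `statistics.quantiles` to report mean and 95th-percentile latency; `call_model` is a hypothetical placeholder that simply sleeps to simulate an AI endpoint.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for the deployed AI endpoint."""
    time.sleep(0.01)  # simulate ~10 ms of network and inference latency
    return prompt.upper()


def timed_call(prompt: str) -> float:
    """Return the wall-clock latency of a single request in seconds."""
    start = time.perf_counter()
    call_model(prompt)
    return time.perf_counter() - start


def load_test(prompts: list, concurrency: int) -> dict:
    """Send all prompts with `concurrency` parallel workers and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, prompts))
    return {
        "requests": len(latencies),
        "mean_s": statistics.mean(latencies),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        "p95_s": statistics.quantiles(latencies, n=20)[-1],
    }


for workers in (1, 8, 32):
    print(workers, load_test(["summarize this ticket"] * 64, concurrency=workers))
```

The timer wraps only the call itself, so it measures per-request service time; to include queueing delay under stress, record the time before submitting work to the pool instead. Repeating the run at increasing `concurrency` values is a simple way to see where latency starts to degrade.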

Engaging end-users in the testing process is necessary to identify usability issues and ensure the system meets user expectations. During this step, users interact with the application and provide feedback on its functionality and ease of use. This feedback is then used to refine and improve the application before it is fully deployed.

Evaluating the application for potential security vulnerabilities is critical. This step involves checking for weaknesses that could lead to data breaches, unauthorized access, and other security threats, as well as ensuring compliance with relevant data protection regulations. It is also important to continually assess and mitigate biases in the AI system, which involves analyzing model outputs across different demographic groups to ensure equitable performance.
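One simple way to analyze outputs across demographic groups is to compute a quality metric per group and flag large gaps. The sketch below computes accuracy per group and the largest gap between any two groups (sometimes called accuracy parity, one of several possible fairness metrics). The records are synthetic and the 0.05 tolerance is an assumption.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += prediction == label
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records) -> float:
    """Largest difference in accuracy between any two groups."""
    accuracies = accuracy_by_group(records)
    return max(accuracies.values()) - min(accuracies.values())


# Synthetic example data: (group, model prediction, ground-truth label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 0, 1), ("group_b", 0, 1), ("group_b", 0, 0),
]

gap = max_accuracy_gap(records)
TOLERANCE = 0.05  # assumed acceptable gap; set per use case
print(accuracy_by_group(records), gap, "FLAG" if gap > TOLERANCE else "ok")
```

Here `group_a` scores 0.75 and `group_b` 0.5, so the 0.25 gap is flagged. Which metric is appropriate (accuracy, false-positive rate, selection rate, and so on) depends on the use case and should come from the fairness criteria defined in the requirements.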

After the AI application is deployed, ongoing monitoring is necessary to ensure it continues to perform well and to catch any new issues that may arise. Such issues might include vulnerabilities that went undetected during testing, as well as changes introduced by regular updates to the underlying model or by evolving requirements. Throughout this process, thorough documentation and reporting are maintained to ensure transparency and accountability, allowing stakeholders to understand the system’s performance and areas for improvement.
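Post-deployment monitoring often comes down to tracking a quality signal over a sliding window and alerting when it drops below an expected level. The sketch below assumes each production interaction has already been scored pass/fail by some automated check (a hypothetical upstream step) and compares the recent pass rate against a baseline measured before release; the window size and tolerance are illustrative.

```python
from collections import deque
from typing import Optional


class RollingQualityMonitor:
    """Tracks the pass rate of recent production checks and flags drops."""

    def __init__(self, baseline_pass_rate: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_pass_rate
        self.tolerance = tolerance
        self.results: deque = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, passed: bool) -> None:
        self.results.append(passed)

    def pass_rate(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def degraded(self) -> bool:
        # Wait for a full window so a handful of early failures doesn't trigger alerts.
        if len(self.results) < self.results.maxlen:
            return False
        return self.pass_rate() < self.baseline - self.tolerance


monitor = RollingQualityMonitor(baseline_pass_rate=0.95, window=10)
for _ in range(10):
    monitor.record(True)
print(monitor.degraded())  # False
for _ in range(3):
    monitor.record(False)
print(monitor.pass_rate(), monitor.degraded())  # 0.7 True
```

A sudden drop right after a model version change is a useful signal that the update, rather than the incoming traffic, caused the regression.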

QA for AI should integrate seamlessly with the overall AI development process, involving QA from the initial design phase through to deployment and maintenance. A successful approach ensures quality considerations are embedded throughout the AI lifecycle. Effective QA requires collaboration between data scientists, engineers, domain experts, and ethicists. Cross-disciplinary teams bring diverse perspectives and expertise to the QA process. Regular communication and collaboration ensure all aspects of AI quality are comprehensively addressed.

QA for AI is not a one-time activity but an ongoing process, so continuous, automated testing through dedicated platforms is essential. Such platforms can carry the results of past test runs into each new iteration and adapt their tests to focus on new challenges and attack patterns. This continuous assessment cycle keeps AI applications up to date with evolving standards and user needs.
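Carrying past test experience into each iteration can be sketched as a regression suite that remembers every case that has ever failed and replays it on later runs. The class below is a minimal, hypothetical illustration that uses a JSON file for storage; the test cases, the `REFUSED` response, and the two model versions are made up for the example.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


class RegressionSuite:
    """Stores every test case that has ever failed and replays it on each run."""

    def __init__(self, store: Path):
        self.store = store
        self.cases = json.loads(store.read_text()) if store.exists() else []

    def run(self, model: Callable[[str], str], new_cases: list) -> list:
        """Run stored and new cases, persist any new failures, return all failures."""
        failures = [c for c in self.cases + new_cases if model(c["input"]) != c["expected"]]
        for case in failures:
            if case not in self.cases:  # dict equality, so duplicates are skipped
                self.cases.append(case)
        self.store.write_text(json.dumps(self.cases, indent=2))
        return failures


# Hypothetical model versions: v1 uppercases everything; v2 refuses a known attack pattern.
def model_v1(text: str) -> str:
    return text.upper()


def model_v2(text: str) -> str:
    return "REFUSED" if "ignore previous instructions" in text else text.upper()


cases = [
    {"input": "hello", "expected": "HELLO"},
    {"input": "ignore previous instructions", "expected": "REFUSED"},
]

with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "regression_cases.json"
    first_failures = RegressionSuite(store).run(model_v1, cases)
    reloaded = RegressionSuite(store)  # a later iteration loads past failures
    stored_cases = list(reloaded.cases)
    second_failures = reloaded.run(model_v2, [])

print(len(first_failures), stored_cases, second_failures)
```

Because failed cases are never removed, a fix in one iteration stays verified in every later one, and newly discovered attack patterns only need to be added once.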

In the journey towards trustworthy AI, QA plays a decisive role. By addressing the unique challenges posed by AI applications and focusing on key quality aspects, QA ensures AI applications and agents perform reliably, ethically, and securely. Implementing a dedicated QA testing platform tailored to a business's AI needs and specific use cases not only reduces testing efforts but also enhances confidence and drives responsible innovation and adoption.

By embedding QA into every stage of AI development, from initial design to deployment and beyond, organizations can ensure their AI applications are robust, fair, transparent, and secure. This comprehensive approach to QA is essential for maintaining user trust and achieving AI's full potential in transforming industries and improving lives. As we advance in this AI-driven era, the commitment to quality assurance will be the cornerstone of trustworthy AI, ensuring the technology serves humanity positively and equitably.