Testing AI applications means learning a whole new vocabulary. Some terms come from the broader world of AI testing: words like "prompt," "hallucination," and "ground truth" that you'll encounter whether you're using Rhesis or another testing framework. Others are specific to how Rhesis organizes and runs tests.

Some terms in Rhesis aren't immediately obvious. What's the difference between a metric and a behavior? Why do I need to set up an endpoint before running tests? We built our new glossary to answer exactly these questions. This post walks you through the essential terms you need to know, split into two parts: AI Testing Essentials and Rhesis-specific vocabulary (Link to full glossary below).

Testing an LLM or agentic application requires a shift from deterministic validation (asserting that Input A always equals Output B) to probabilistic evaluation. In this paradigm, we measure the statistical likelihood that a response is semantically and contextually correct.

To build a production-ready evaluation pipeline, you need to understand the four pillars of the AI testing stack.

The complexity of your test depends on the depth of the interaction.

In AI, "correct" is rarely a 1:1 string match. Instead, we use technical proxies to measure accuracy:

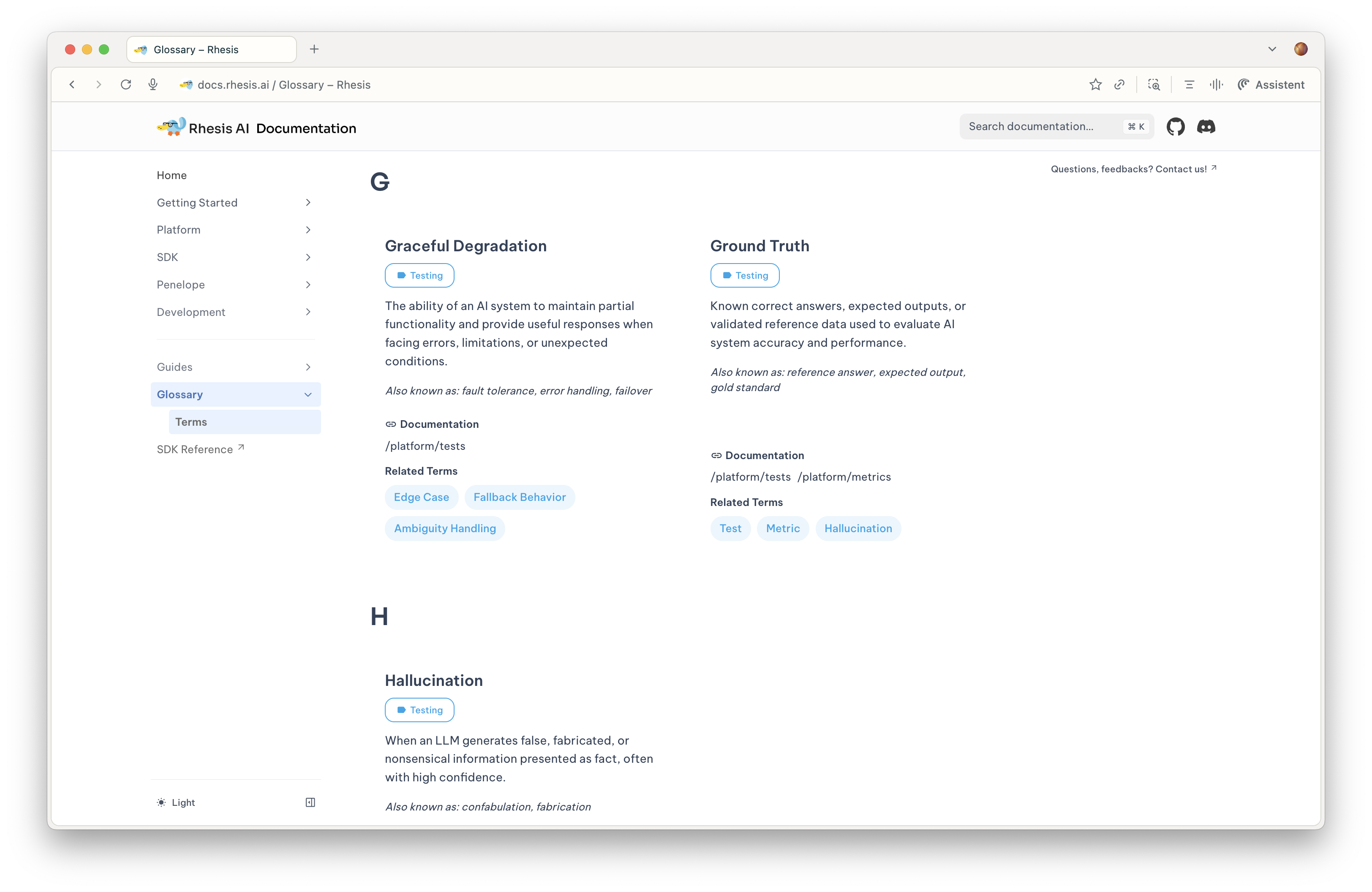

Ground Truth: A curated dataset of "gold standard" responses used as a benchmark. It acts as the anchor for measuring an AI's performance.

Knowledge: Domain-specific source materials (documentation or FAQs) used to provide context. In Retrieval-Augmented Generation (RAG), this is the data the AI must use to ground its answer.

Baseline: A reference point established from initial test results. It serves as a benchmark for comparing future performance and detecting Regression (when a new version of your model performs worse than the previous one).

Manual review is the bottleneck of AI development. To scale, we use an Evaluation Pipeline where a high-reasoning model (the "Judge") inspects the application's output (see our dedicated blog post on LLM-as-a-Judge)

Evaluation Prompt: A specialized system prompt that defines the rubric for the Judge. It includes the scoring logic and specific criteria the AI must meet.

Chain-of-Thought (CoT) Reasoning: A technique where the Judge model is instructed to generate its internal "reasoning trace" before outputting a final score. This makes the evaluation transparent and helps engineers debug why a test failed.

Metrics (Categorical vs. Numeric):

Technical testing is designed to identify and mitigate non-deterministic "bugs" unique to LLMs:

Hallucination: When the model generates a stochastic output that is factually false or not grounded in the provided source context.

Prompt Injection: An adversarial attack where a user inputs instructions designed to bypass the AI's System Guardrails (e.g., "Ignore all previous instructions").

Context Window Saturation: A failure where the input is so long that the model "forgets" the middle of the text or loses track of its primary objective.

Now let's talk about Rhesis-specific terminology. This is where things can get confusing, so we'll break down the structure from the top down.

Organization → Project → Endpoint

Your Organization is your company's workspace in Rhesis: everything lives under this umbrella. Within an organization, you create Projects for each AI application you're testing. Think of a project as a dedicated testing environment: "Customer Support Chatbot v2" or "Marketing Copy Generator."

Here's the one that confuses people most: Endpoints.

An Endpoint is a complete configuration for connecting to your AI service or API. It defines how Rhesis sends test inputs to your application and receives responses back for evaluation. You configure it once (with your API URL, authentication, request format, and response mappings), then use it across hundreds of tests.

When you're evaluating AI responses, Rhesis uses a hierarchy for quality measurement:

A Metric is a specific, quantifiable measurement. Rhesis uses LLM-based metrics to evaluate responses. You can use either off-the-shelf metrics from frameworks like Deepeval, Ragas, or Rhesis-specific metrics, or create your own custom metrics. A metric might check for “Answer Relevancy” or “Jailbreak Detection”, returning a categorical or numeric score, along with a reasoning for it.

A Behavior is an expectation of how your AI should perform. A behavior groups together one or more metrics that collectively evaluate that expectation. For instance, you might have a behavior like “Compliance” with related metrics such as “Answer Faithfulness" or “Contextual Coherence”.

Test → Test Set → Test Run

A Test is a single prompt with expectations about how the AI should respond. It contains your input, any expected outputs or behaviors, and metadata.

A Test Set is a collection of related tests you run together

A Test Run is what happens when you execute a test set against an endpoint. Rhesis sends all the prompts, collects responses, scores them against your metrics, and gives you results.

This post covers the essentials, but there's more to discover. Our complete glossary includes definitions for assertions, datasets, confidence scores, drift detection, and all the other terms you'll encounter while testing AI applications.

Check it out at docs.rhesis.ai/glossary, and if you're ready to start testing, head to app.rhesis.ai to get started.

Have questions about any of these terms? Found something confusing in the glossary? Let us know: drop a message on Discord or send us a message, we're always improving our documentation based on your feedback. Building with conversational AI? Checkout our repo.